
Tizen Native API

5.5


The NNStreamer function provides interfaces to create and execute stream pipelines with neural networks and sensors.

Required Header

#include <nnstreamer/nnstreamer.h>

Overview


This function allows the following operations with NNStreamer:

- Create a stream pipeline with NNStreamer plugins, GStreamer plugins, and sensor/camera/mic inputs.

- Interfaces to push data to the pipeline from the application.

- Interfaces to pull data from the pipeline to the application.

- Interfaces to start/stop/destroy the pipeline.

- Interfaces to control switches and valves in the pipeline.

- Utility functions to handle data from the pipeline.

Note that this set of functions is intended to be thread-safe.

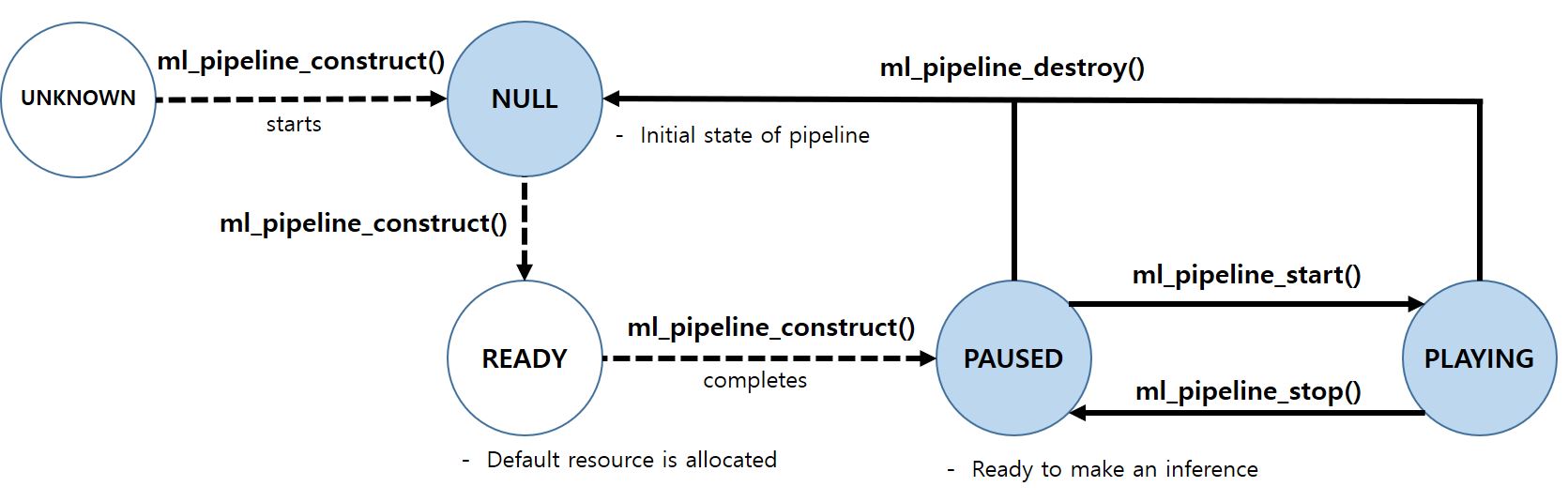

Pipeline State Diagram

Related Features

This function is related to the following features:

- http://tizen.org/feature/machine_learning

- http://tizen.org/feature/machine_learning.inference

It is recommended to probe features in your application for reliability.

You can check whether a device supports the related features by using System Information, and adjust the behavior of your application accordingly.

To ensure that your application runs only on devices that support specific features, declare those features in your manifest file using the manifest editor in the SDK.

For example, if your application accesses the camera device, you have to add the 'http://tizen.org/privilege/camera' privilege to the manifest of your application.

More details on declaring features for your application can be found in Feature Element.

Functions

| Declaration | Description |
|---|---|
| int ml_pipeline_construct (const char *pipeline_description, ml_pipeline_state_cb cb, void *user_data, ml_pipeline_h *pipe) | Constructs the pipeline (GStreamer + NNStreamer). |
| int ml_pipeline_destroy (ml_pipeline_h pipe) | Destroys the pipeline. |
| int ml_pipeline_get_state (ml_pipeline_h pipe, ml_pipeline_state_e *state) | Gets the state of the pipeline. |
| int ml_pipeline_start (ml_pipeline_h pipe) | Starts the pipeline asynchronously. |
| int ml_pipeline_stop (ml_pipeline_h pipe) | Stops the pipeline asynchronously. |
| int ml_pipeline_sink_register (ml_pipeline_h pipe, const char *sink_name, ml_pipeline_sink_cb cb, void *user_data, ml_pipeline_sink_h *sink_handle) | Registers a callback for a sink node of an NNStreamer pipeline. |
| int ml_pipeline_sink_unregister (ml_pipeline_sink_h sink_handle) | Unregisters a callback for a sink node of an NNStreamer pipeline. |
| int ml_pipeline_src_get_handle (ml_pipeline_h pipe, const char *src_name, ml_pipeline_src_h *src_handle) | Gets a handle to operate a src node of an NNStreamer pipeline. |
| int ml_pipeline_src_release_handle (ml_pipeline_src_h src_handle) | Releases the given src handle. |
| int ml_pipeline_src_input_data (ml_pipeline_src_h src_handle, ml_tensors_data_h data, ml_pipeline_buf_policy_e policy) | Adds an input data frame. |
| int ml_pipeline_src_get_tensors_info (ml_pipeline_src_h src_handle, ml_tensors_info_h *info) | Gets a handle for the tensors information of the given src node. |
| int ml_pipeline_switch_get_handle (ml_pipeline_h pipe, const char *switch_name, ml_pipeline_switch_e *switch_type, ml_pipeline_switch_h *switch_handle) | Gets a handle to operate a "GstInputSelector / GstOutputSelector" node of an NNStreamer pipeline. |
| int ml_pipeline_switch_release_handle (ml_pipeline_switch_h switch_handle) | Releases the given switch handle. |
| int ml_pipeline_switch_select (ml_pipeline_switch_h switch_handle, const char *pad_name) | Controls the switch with the given handle to select input/output nodes (pads). |
| int ml_pipeline_switch_get_pad_list (ml_pipeline_switch_h switch_handle, char ***list) | Gets the pad names of a switch. |
| int ml_pipeline_valve_get_handle (ml_pipeline_h pipe, const char *valve_name, ml_pipeline_valve_h *valve_handle) | Gets a handle to operate a "GstValve" node of an NNStreamer pipeline. |
| int ml_pipeline_valve_release_handle (ml_pipeline_valve_h valve_handle) | Releases the given valve handle. |
| int ml_pipeline_valve_set_open (ml_pipeline_valve_h valve_handle, bool open) | Controls the valve with the given handle. |
| int ml_tensors_info_create (ml_tensors_info_h *info) | Creates a tensors information handle with default values. |
| int ml_tensors_info_destroy (ml_tensors_info_h info) | Frees the given tensors information handle. |
| int ml_tensors_info_validate (const ml_tensors_info_h info, bool *valid) | Validates the given tensors information. |
| int ml_tensors_info_clone (ml_tensors_info_h dest, const ml_tensors_info_h src) | Copies the tensors information. |
| int ml_tensors_info_set_count (ml_tensors_info_h info, unsigned int count) | Sets the number of tensors in the given tensors information handle. |
| int ml_tensors_info_get_count (ml_tensors_info_h info, unsigned int *count) | Gets the number of tensors in the given tensors information handle. |
| int ml_tensors_info_set_tensor_name (ml_tensors_info_h info, unsigned int index, const char *name) | Sets the tensor name in the given tensors information handle. |
| int ml_tensors_info_get_tensor_name (ml_tensors_info_h info, unsigned int index, char **name) | Gets the tensor name from the given tensors information handle. |
| int ml_tensors_info_set_tensor_type (ml_tensors_info_h info, unsigned int index, const ml_tensor_type_e type) | Sets the tensor type in the given tensors information handle. |
| int ml_tensors_info_get_tensor_type (ml_tensors_info_h info, unsigned int index, ml_tensor_type_e *type) | Gets the tensor type from the given tensors information handle. |
| int ml_tensors_info_set_tensor_dimension (ml_tensors_info_h info, unsigned int index, const ml_tensor_dimension dimension) | Sets the tensor dimension in the given tensors information handle. |
| int ml_tensors_info_get_tensor_dimension (ml_tensors_info_h info, unsigned int index, ml_tensor_dimension dimension) | Gets the tensor dimension from the given tensors information handle. |
| int ml_tensors_info_get_tensor_size (ml_tensors_info_h info, int index, size_t *data_size) | Gets the size, in bytes, of the tensors data described by the given tensors information handle. |
| int ml_tensors_data_create (const ml_tensors_info_h info, ml_tensors_data_h *data) | Creates a tensor data frame with the given tensors information. |
| int ml_tensors_data_destroy (ml_tensors_data_h data) | Frees the given tensors data handle. |
| int ml_tensors_data_get_tensor_data (ml_tensors_data_h data, unsigned int index, void **raw_data, size_t *data_size) | Gets the tensor data of the given handle. |
| int ml_tensors_data_set_tensor_data (ml_tensors_data_h data, unsigned int index, const void *raw_data, const size_t data_size) | Copies tensor data to the given handle. |
| int ml_check_nnfw_availability (ml_nnfw_type_e nnfw, ml_nnfw_hw_e hw, bool *available) | Checks the availability of the given execution environments. |

Typedefs

| Declaration | Description |
|---|---|
| typedef unsigned int ml_tensor_dimension[4] | The dimensions of a tensor that NNStreamer supports. |
| typedef void *ml_tensors_info_h | A handle of a tensors metadata instance. |
| typedef void *ml_tensors_data_h | A handle of input or output frames. ml_tensors_info_h is the handle for tensors metadata. |
| typedef void *ml_pipeline_h | A handle of an NNStreamer pipeline. |
| typedef void *ml_pipeline_sink_h | A handle of a "sink node" of an NNStreamer pipeline. |
| typedef void *ml_pipeline_src_h | A handle of a "src node" of an NNStreamer pipeline. |
| typedef void *ml_pipeline_switch_h | A handle of a "switch" of an NNStreamer pipeline. |
| typedef void *ml_pipeline_valve_h | A handle of a "valve node" of an NNStreamer pipeline. |
| typedef enum _ml_tensor_type_e ml_tensor_type_e | Possible data element types of Tensor in NNStreamer. |
| typedef void (*ml_pipeline_sink_cb)(const ml_tensors_data_h data, const ml_tensors_info_h info, void *user_data) | Callback for sink element of NNStreamer pipelines (pipeline's output). |
| typedef void (*ml_pipeline_state_cb)(ml_pipeline_state_e state, void *user_data) | Callback for the change of pipeline state. |

Defines

| Declaration | Description |
|---|---|
| #define ML_TIZEN_CAM_VIDEO_SRC "tizencamvideosrc" | The virtual name to set the video source of camcorder in Tizen. |
| #define ML_TIZEN_CAM_AUDIO_SRC "tizencamaudiosrc" | The virtual name to set the audio source of camcorder in Tizen. |
| #define ML_TENSOR_RANK_LIMIT (4) | The maximum rank that NNStreamer supports with Tizen APIs. |
| #define ML_TENSOR_SIZE_LIMIT (16) | The maximum number of other/tensor instances that other/tensors may have. |

Define Documentation

#define ML_TENSOR_RANK_LIMIT (4)

The maximum rank that NNStreamer supports with Tizen APIs.

- Since :

- 5.5

#define ML_TENSOR_SIZE_LIMIT (16)

The maximum number of other/tensor instances that other/tensors may have.

- Since :

- 5.5

#define ML_TIZEN_CAM_AUDIO_SRC "tizencamaudiosrc"

The virtual name to set the audio source of camcorder in Tizen.

If an application needs to access the recorder device in the pipeline, use this virtual name as the audio source element. Note that you have to add the 'http://tizen.org/privilege/recorder' privilege to the manifest of your application.

- Since :

- 5.5

#define ML_TIZEN_CAM_VIDEO_SRC "tizencamvideosrc"

The virtual name to set the video source of camcorder in Tizen.

If an application needs to access the camera device in the pipeline, use this virtual name as the video source element. Note that you have to add the 'http://tizen.org/privilege/camera' privilege to the manifest of your application.

- Since :

- 5.5
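As a minimal sketch, the helper below builds a gst_parse_launch()-style description string that starts from the virtual camera source; a string like this is what you would pass as pipeline_description to ml_pipeline_construct(). The helper name build_camera_pipeline, the videoscale and caps stage, and the 224x224 RGB format are illustrative assumptions rather than requirements of the API. The macro is redefined locally only so that the sketch compiles without the Tizen headers.

```c
#include <stdio.h>

/* Mirrors the documented value of ML_TIZEN_CAM_VIDEO_SRC. In a Tizen
 * application, include <nnstreamer/nnstreamer.h> instead of defining it. */
#ifndef ML_TIZEN_CAM_VIDEO_SRC
#define ML_TIZEN_CAM_VIDEO_SRC "tizencamvideosrc"
#endif

/* Hypothetical helper: writes a pipeline description that converts camera
 * frames into tensors and ends in a tensor_sink named sink_name.
 * Returns 0 on success, or -1 if buf is too small for the whole string. */
static int
build_camera_pipeline (char *buf, size_t buf_size, const char *sink_name)
{
  int written = snprintf (buf, buf_size,
      "%s ! videoconvert ! videoscale ! "
      "video/x-raw,format=RGB,width=224,height=224 ! "
      "tensor_converter ! tensor_sink name=%s",
      ML_TIZEN_CAM_VIDEO_SRC, sink_name);

  /* snprintf reports the length it wanted to write; a value that does not
   * fit means the description was truncated. */
  if (written < 0 || (size_t) written >= buf_size)
    return -1;
  return 0;
}
```

Checking the return value matters because a silently truncated description would only fail later, inside ml_pipeline_construct(), with a less obvious error.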

Typedef Documentation

typedef void* ml_pipeline_h

A handle of an NNStreamer pipeline.

- Since :

- 5.5

typedef void (*ml_pipeline_sink_cb)(const ml_tensors_data_h data, const ml_tensors_info_h info, void *user_data)

Callback for sink element of NNStreamer pipelines (pipeline's output).

If an application wants to receive the output of an NNStreamer stream, use this callback to get data from the stream. This callback is called synchronously, and the buffer may be deallocated after it returns. Thus, if you need the data afterwards, copy it to another buffer and return quickly. Do not spend too much time in the callback; using very small tensors at sinks is recommended.

- Since :

- 5.5

- Remarks:

- The data can be used only in the callback. To use outside, make a copy.

- The info can be used only in the callback. To use outside, make a copy.

- Parameters:

- [out] data The handle of the tensor output (a single frame, tensor/tensors). The number of tensors is determined by ml_tensors_info_get_count() with the handle 'info'. Note that the maximum number of tensors is 16 (ML_TENSOR_SIZE_LIMIT).

- [out] info The handle of tensors information (cardinality, dimension, and type of the given tensor/tensors).

- [out] user_data User application's private data.
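Because the data handle is valid only inside the callback, one way to keep a frame is to copy its bytes into memory that the application owns. The sketch below is plain C: frame_copy_t, frame_copy_from() and frame_copy_clear() are hypothetical application helpers, not part of the NNStreamer API. Inside a real ml_pipeline_sink_cb you would first obtain the pointer and size with ml_tensors_data_get_tensor_data() and then call the copy helper, keeping the callback itself short.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical application-side container for one copied tensor. */
typedef struct {
  void *bytes;
  size_t size;
} frame_copy_t;

/* Copies size bytes from raw into a newly allocated buffer owned by the
 * application. Returns 0 on success, -1 on bad input or allocation failure.
 *
 * In a real sink callback (not compiled here; app->latest is hypothetical):
 *   void *raw; size_t size;
 *   if (ml_tensors_data_get_tensor_data (data, 0, &raw, &size) == ML_ERROR_NONE)
 *     frame_copy_from (&app->latest, raw, size);
 */
static int
frame_copy_from (frame_copy_t *out, const void *raw, size_t size)
{
  if (out == NULL || raw == NULL || size == 0)
    return -1;
  out->bytes = malloc (size);
  if (out->bytes == NULL)
    return -1;
  memcpy (out->bytes, raw, size);
  out->size = size;
  return 0;
}

/* Releases a copy made by frame_copy_from() and resets the container. */
static void
frame_copy_clear (frame_copy_t *f)
{
  free (f->bytes);
  f->bytes = NULL;
  f->size = 0;
}
```

Heavier processing, such as post-processing inference results, can then run on another thread using the copy, so the pipeline is not blocked by the callback.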

typedef void* ml_pipeline_sink_h

A handle of a "sink node" of an NNStreamer pipeline.

- Since :

- 5.5

typedef void* ml_pipeline_src_h

A handle of a "src node" of an NNStreamer pipeline.

- Since :

- 5.5

typedef void (*ml_pipeline_state_cb)(ml_pipeline_state_e state, void *user_data)

Callback for the change of pipeline state.

If an application wants to be notified of pipeline state changes, use this callback. It can be registered when constructing the pipeline with ml_pipeline_construct(). Do not spend too much time in the callback.

- Since :

- 5.5

- Parameters:

- [out] state The new state of the pipeline.

- [out] user_data User application's private data.

typedef void* ml_pipeline_switch_h

A handle of a "switch" of an NNStreamer pipeline.

- Since :

- 5.5

typedef void* ml_pipeline_valve_h

A handle of a "valve node" of an NNStreamer pipeline.

- Since :

- 5.5

typedef unsigned int ml_tensor_dimension[4]

The dimensions of a tensor that NNStreamer supports.

- Since :

- 5.5
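The byte size of a tensor is the product of its four dimensions multiplied by the size of one element. ml_tensors_info_get_tensor_size() already reports this for a tensors information handle; the sketch below only illustrates the arithmetic. It assumes unused dimensions are set to 1 (for example, a 224x224 RGB image described as 3:224:224:1) and takes the element size as a parameter instead of deriving it from ml_tensor_type_e. The name tensor_byte_size is hypothetical.

```c
#include <stddef.h>

/* Mirrors ML_TENSOR_RANK_LIMIT (4), the length of ml_tensor_dimension. */
#define SKETCH_RANK_LIMIT 4

/* Hypothetical helper: number of bytes in one tensor with the given
 * dimensions, where element_size is the size of one element in bytes
 * (for example 1 for uint8, 4 for float32). A zero dimension yields 0. */
static size_t
tensor_byte_size (const unsigned int dim[SKETCH_RANK_LIMIT], size_t element_size)
{
  size_t count = 1;

  for (int i = 0; i < SKETCH_RANK_LIMIT; i++)
    count *= dim[i];
  return count * element_size;
}
```

For 3:224:224:1 this gives 3 x 224 x 224 x 1 = 150528 elements, so 150528 bytes with uint8 elements and 602112 bytes with float32 elements.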

typedef enum _ml_tensor_type_e ml_tensor_type_e

Possible data element types of Tensor in NNStreamer.

- Since :

- 5.5

typedef void* ml_tensors_data_h

A handle of input or output frames. ml_tensors_info_h is the handle for tensors metadata.

- Since :

- 5.5

typedef void* ml_tensors_info_h

A handle of a tensors metadata instance.

- Since :

- 5.5

Enumeration Type Documentation

enum _ml_tensor_type_e

Possible data element types of Tensor in NNStreamer.

- Since :

- 5.5

- Enumerator:

enum ml_error_e

Enumeration for the error codes of NNStreamer Pipelines.

- Since :

- 5.5

- Enumerator:

enum ml_nnfw_hw_e

Types of hardware resources to be used for NNFWs. Note that if the affinity (nnn) is not supported by the driver or hardware, it is ignored.

- Since :

- 5.5

enum ml_nnfw_type_e

Types of NNFWs.

- Since :

- 5.5

- Enumerator:

enum ml_pipeline_buf_policy_e

Enumeration for buffer deallocation policies.

- Since :

- 5.5

- Enumerator:

enum ml_pipeline_state_e

Enumeration for pipeline state.

The pipeline state is described in the Pipeline State Diagram. Refer to https://gstreamer.freedesktop.org/documentation/plugin-development/basics/states.html.

- Since :

- 5.5

enum ml_pipeline_switch_e

Function Documentation

int ml_check_nnfw_availability (ml_nnfw_type_e nnfw, ml_nnfw_hw_e hw, bool *available)

Checks the availability of the given execution environments.

If the function returns an error, available is not changed.

- Since :

- 5.5

- Parameters:

- [in] nnfw Check if the nnfw is available in the system. Set ML_NNFW_TYPE_ANY to skip checking nnfw.

- [in] hw Check if the hardware is available in the system. Set ML_NNFW_HW_ANY to skip checking hardware.

- [out] available true if it's available, false if it's not available.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful and the environments are available.

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_pipeline_construct (const char *pipeline_description, ml_pipeline_state_cb cb, void *user_data, ml_pipeline_h *pipe)

Constructs the pipeline (GStreamer + NNStreamer).

Use this function to create gst_parse_launch()-compatible NNStreamer pipelines.

- Since :

- 5.5

- Remarks:

- If the function succeeds, the pipe handle must be released using ml_pipeline_destroy().

- http://tizen.org/privilege/mediastorage is needed if pipeline_description is relevant to media storage.

- http://tizen.org/privilege/externalstorage is needed if pipeline_description is relevant to external storage.

- http://tizen.org/privilege/camera is needed if pipeline_description accesses the camera device.

- http://tizen.org/privilege/recorder is needed if pipeline_description accesses the recorder device.

- Parameters:

- [in] pipeline_description The pipeline description compatible with GStreamer gst_parse_launch(). Refer to the GStreamer manual or the NNStreamer (https://github.com/nnsuite/nnstreamer) documentation for examples and the grammar.

- [in] cb The function to be called when the pipeline state is changed. You may set NULL if it's not required.

- [in] user_data Private data for the callback. This value is passed to the callback when it's invoked.

- [out] pipe The NNStreamer pipeline handle created from the given description.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid. (Pipeline is not negotiated yet.)

- ML_ERROR_STREAMS_PIPE Pipeline construction failed because of a wrong parameter or an initialization failure.

- ML_ERROR_PERMISSION_DENIED The application does not have the required privilege to access the media storage, external storage, microphone, or camera.

- Precondition:

- The pipeline state should be ML_PIPELINE_STATE_UNKNOWN or ML_PIPELINE_STATE_NULL.

- Postcondition:

- The pipeline state will be ML_PIPELINE_STATE_PAUSED in the same thread.

int ml_pipeline_destroy (ml_pipeline_h pipe)

Destroys the pipeline.

Use this function to destroy the pipeline constructed with ml_pipeline_construct().

- Since :

- 5.5

- Parameters:

- [in] pipe The pipeline to be destroyed.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER The parameter is invalid. (Pipeline is not negotiated yet.)

- ML_ERROR_STREAMS_PIPE Cannot access the pipeline status.

- Precondition:

- The pipeline state should be ML_PIPELINE_STATE_PLAYING or ML_PIPELINE_STATE_PAUSED.

- Postcondition:

- The pipeline state will be ML_PIPELINE_STATE_NULL.

int ml_pipeline_get_state (ml_pipeline_h pipe, ml_pipeline_state_e *state)

Gets the state of the pipeline.

Gets the state of the pipeline handle returned by ml_pipeline_construct().

- Since :

- 5.5

- Parameters:

- [in] pipe The pipeline handle.

- [out] state The pipeline state.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid. (Pipeline is not negotiated yet.)

- ML_ERROR_STREAMS_PIPE Failed to get state from the pipeline.

int ml_pipeline_sink_register (ml_pipeline_h pipe, const char *sink_name, ml_pipeline_sink_cb cb, void *user_data, ml_pipeline_sink_h *sink_handle)

Registers a callback for a sink node of an NNStreamer pipeline.

- Since :

- 5.5

- Remarks:

- If the function succeeds, the sink_handle must be unregistered using ml_pipeline_sink_unregister().

- Parameters:

- [in] pipe The pipeline to be attached with a sink node.

- [in] sink_name The name of the sink node, as given in the description passed to ml_pipeline_construct().

- [in] cb The function to be called by the sink node.

- [in] user_data Private data for the callback. This value is passed to the callback when it's invoked.

- [out] sink_handle The sink handle.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid. (Not negotiated, sink_name is not found, or sink_name has an invalid type.)

- ML_ERROR_STREAMS_PIPE Failed to connect a signal to the sink element.

- Precondition:

- The pipeline state should be ML_PIPELINE_STATE_PAUSED.

int ml_pipeline_sink_unregister (ml_pipeline_sink_h sink_handle)

Unregisters a callback for a sink node of an NNStreamer pipeline.

- Since :

- 5.5

- Parameters:

- [in] sink_handle The sink handle to be unregistered.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

- Precondition:

- The pipeline state should be ML_PIPELINE_STATE_PAUSED.

int ml_pipeline_src_get_handle (ml_pipeline_h pipe, const char *src_name, ml_pipeline_src_h *src_handle)

Gets a handle to operate a src node of an NNStreamer pipeline.

- Since :

- 5.5

- Remarks:

- If the function succeeds, the src_handle must be released using ml_pipeline_src_release_handle().

- Parameters:

- [in] pipe The pipeline to be attached with a src node.

- [in] src_name The name of the src node, as given in the description passed to ml_pipeline_construct().

- [out] src_handle The src handle.

- Returns:

- 0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

- ML_ERROR_STREAMS_PIPE Failed to get the src element.

- ML_ERROR_TRY_AGAIN The pipeline is not ready yet.

int ml_pipeline_src_get_tensors_info (ml_pipeline_src_h src_handle, ml_tensors_info_h *info)

Gets a handle for the tensors information of the given src node.

If the media type is not other/tensor or other/tensors, the info handle may not be correct. If you want to use other media types, you MUST set the correct properties.

- Since :

- 5.5

- Remarks:

- If the function succeeds, the info handle must be released using ml_tensors_info_destroy().

- Parameters:

- [in] src_handle The source handle returned by ml_pipeline_src_get_handle().

- [out] info The handle of tensors information.

- Returns:

- 0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

- ML_ERROR_STREAMS_PIPE The pipeline has inconsistent padcaps. (Pipeline is not negotiated yet.)

- ML_ERROR_TRY_AGAIN The pipeline is not ready yet.

int ml_pipeline_src_input_data (ml_pipeline_src_h src_handle, ml_tensors_data_h data, ml_pipeline_buf_policy_e policy)

Adds an input data frame.

- Since :

- 5.5

- Parameters:

- [in] src_handle The source handle returned by ml_pipeline_src_get_handle().

- [in] data The handle of input tensors, in the format of the tensors info given by ml_pipeline_src_get_tensors_info(). This function takes ownership of the data if policy is ML_PIPELINE_BUF_POLICY_AUTO_FREE.

- [in] policy The policy of buffer deallocation.

- Returns:

- 0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

- ML_ERROR_STREAMS_PIPE The pipeline has inconsistent padcaps. (Pipeline is not negotiated yet.)

- ML_ERROR_TRY_AGAIN The pipeline is not ready yet.

int ml_pipeline_src_release_handle (ml_pipeline_src_h src_handle)

Releases the given src handle.

- Since :

- 5.5

- Parameters:

- [in] src_handle The src handle to be released.

- Returns:

- 0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_pipeline_start (ml_pipeline_h pipe)

Starts the pipeline asynchronously.

The pipeline handle returned by ml_pipeline_construct() is started. Note that this is an asynchronous function, so the state might be "pending". If you need to get the changed state, add a callback while constructing the pipeline with ml_pipeline_construct().

- Since :

- 5.5

- Parameters:

- [in] pipe The pipeline handle.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid. (Pipeline is not negotiated yet.)

- ML_ERROR_STREAMS_PIPE Failed to start the pipeline.

- Precondition:

- The pipeline state should be ML_PIPELINE_STATE_PAUSED.

- Postcondition:

- The pipeline state will be ML_PIPELINE_STATE_PLAYING.

int ml_pipeline_stop (ml_pipeline_h pipe)

Stops the pipeline asynchronously.

The pipeline handle returned by ml_pipeline_construct() is stopped. Note that this is an asynchronous function, so the state might be "pending". If you need to get the changed state, add a callback while constructing the pipeline with ml_pipeline_construct().

- Since :

- 5.5

- Parameters:

- [in] pipe The pipeline to be stopped.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid. (Pipeline is not negotiated yet.)

- ML_ERROR_STREAMS_PIPE Failed to stop the pipeline.

- Precondition:

- The pipeline state should be ML_PIPELINE_STATE_PLAYING.

- Postcondition:

- The pipeline state will be ML_PIPELINE_STATE_PAUSED.
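The preconditions and postconditions of ml_pipeline_construct(), ml_pipeline_start(), ml_pipeline_stop() and ml_pipeline_destroy() can be summarized as a small transition table. The sketch below models only the states named in those conditions with its own hypothetical enum (it does not reuse ml_pipeline_state_e or assume its numeric values), and it tracks final states only: because start and stop are asynchronous, a real pipeline may report a pending state in between.

```c
#include <stdbool.h>

/* Hypothetical mirror of the states named in the pre/postconditions. */
typedef enum {
  STATE_UNKNOWN,
  STATE_NULL,
  STATE_PAUSED,
  STATE_PLAYING
} pipe_state_t;

/* Hypothetical names for the four lifecycle calls. */
typedef enum { OP_CONSTRUCT, OP_START, OP_STOP, OP_DESTROY } pipe_op_t;

/* Returns true if op's documented precondition holds in state `from`, and
 * then stores the documented postcondition state in *to. On false, *to is
 * left untouched. */
static bool
pipe_transition (pipe_state_t from, pipe_op_t op, pipe_state_t *to)
{
  switch (op) {
    case OP_CONSTRUCT: /* UNKNOWN or NULL -> PAUSED */
      if (from != STATE_UNKNOWN && from != STATE_NULL)
        return false;
      *to = STATE_PAUSED;
      return true;
    case OP_START:     /* PAUSED -> PLAYING */
      if (from != STATE_PAUSED)
        return false;
      *to = STATE_PLAYING;
      return true;
    case OP_STOP:      /* PLAYING -> PAUSED */
      if (from != STATE_PLAYING)
        return false;
      *to = STATE_PAUSED;
      return true;
    case OP_DESTROY:   /* PAUSED or PLAYING -> NULL */
      if (from != STATE_PAUSED && from != STATE_PLAYING)
        return false;
      *to = STATE_NULL;
      return true;
  }
  return false;
}
```

A model like this can be handy in application unit tests to check that calls are issued in a valid order before running them against a real pipeline.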

int ml_pipeline_switch_get_handle (ml_pipeline_h pipe, const char *switch_name, ml_pipeline_switch_e *switch_type, ml_pipeline_switch_h *switch_handle)

Gets a handle to operate a "GstInputSelector / GstOutputSelector" node of an NNStreamer pipeline.

Refer to https://gstreamer.freedesktop.org/data/doc/gstreamer/head/gstreamer-plugins/html/gstreamer-plugins-input-selector.html for input selectors. Refer to https://gstreamer.freedesktop.org/data/doc/gstreamer/head/gstreamer-plugins/html/gstreamer-plugins-output-selector.html for output selectors.

- Since :

- 5.5

- Remarks:

- If the function succeeds, the switch_handle must be released using ml_pipeline_switch_release_handle().

- Parameters:

- [in] pipe The pipeline to be managed.

- [in] switch_name The name of the switch (InputSelector/OutputSelector).

- [out] switch_type The type of the switch. If NULL, it is ignored.

- [out] switch_handle The switch handle.

- Returns:

- 0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_pipeline_switch_get_pad_list (ml_pipeline_switch_h switch_handle, char ***list)

Gets the pad names of a switch.

- Since :

- 5.5

- Remarks:

- If the function succeeds, the list and its contents should be released using g_free(). Refer to the sample code below.

- Parameters:

- [in] switch_handle The switch handle returned by ml_pipeline_switch_get_handle().

- [out] list NULL-terminated array of char*. The caller must free each string (char*) in the list and then free the list itself.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

- ML_ERROR_STREAMS_PIPE The element is neither an input switch nor an output switch (internal data inconsistency).

Here is an example of the usage:

    #include <glib.h>
    #include <nnstreamer/nnstreamer.h>

    int status;
    gchar *pipeline;
    ml_pipeline_h handle = NULL;
    ml_pipeline_switch_e switch_type;
    ml_pipeline_switch_h switch_handle = NULL;
    gchar **node_list = NULL;

    // pipeline description
    pipeline = g_strdup ("videotestsrc is-live=true ! videoconvert ! tensor_converter ! output-selector name=outs "
        "outs.src_0 ! tensor_sink name=sink0 async=false "
        "outs.src_1 ! tensor_sink name=sink1 async=false");

    status = ml_pipeline_construct (pipeline, NULL, NULL, &handle);
    if (status != ML_ERROR_NONE) {
      // handle error case
      goto error;
    }

    status = ml_pipeline_switch_get_handle (handle, "outs", &switch_type, &switch_handle);
    if (status != ML_ERROR_NONE) {
      // handle error case
      goto error;
    }

    status = ml_pipeline_switch_get_pad_list (switch_handle, &node_list);
    if (status != ML_ERROR_NONE) {
      // handle error case
      goto error;
    }

    if (node_list) {
      gchar *name = NULL;
      guint idx = 0;

      while ((name = node_list[idx++]) != NULL) {
        // node name is 'src_0' or 'src_1'
        // release name
        g_free (name);
      }
      // release list of switch pads
      g_free (node_list);
    }

    error:
    // release only the handles that were actually obtained
    if (switch_handle)
      ml_pipeline_switch_release_handle (switch_handle);
    if (handle)
      ml_pipeline_destroy (handle);
    g_free (pipeline);

int ml_pipeline_switch_release_handle (ml_pipeline_switch_h switch_handle)

Releases the given switch handle.

- Since :

- 5.5

- Parameters:

- [in] switch_handle The handle to be released.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_pipeline_switch_select (ml_pipeline_switch_h switch_handle, const char *pad_name)

Controls the switch with the given handle to select input/output nodes (pads).

- Since :

- 5.5

- Parameters:

- [in] switch_handle The switch handle returned by ml_pipeline_switch_get_handle().

- [in] pad_name The name of the chosen pad to be activated. Use ml_pipeline_switch_get_pad_list() to list the available pad names.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_pipeline_valve_get_handle (ml_pipeline_h pipe, const char *valve_name, ml_pipeline_valve_h *valve_handle)

Gets a handle to operate a "GstValve" node of an NNStreamer pipeline.

Refer to https://gstreamer.freedesktop.org/data/doc/gstreamer/head/gstreamer-plugins/html/gstreamer-plugins-valve.html for more info.

- Since :

- 5.5

- Remarks:

- If the function succeeds, the valve_handle must be released using ml_pipeline_valve_release_handle().

- Parameters:

- [in] pipe The pipeline to be managed.

- [in] valve_name The name of the valve (Valve).

- [out] valve_handle The valve handle.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_pipeline_valve_release_handle (ml_pipeline_valve_h valve_handle)

Releases the given valve handle.

- Since :

- 5.5

- Parameters:

- [in] valve_handle The handle to be released.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_pipeline_valve_set_open (ml_pipeline_valve_h valve_handle, bool open)

Controls the valve with the given handle.

- Since :

- 5.5

- Parameters:

- [in] valve_handle The valve handle returned by ml_pipeline_valve_get_handle().

- [in] open true to open (let the flow pass), false to close (drop and stop the flow).

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_data_create (const ml_tensors_info_h info, ml_tensors_data_h *data)

Creates a tensor data frame with the given tensors information.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information for the allocation.

- [out] data The handle of tensors data. The caller is responsible for freeing the allocated data with ml_tensors_data_destroy().

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

- ML_ERROR_STREAMS_PIPE Failed to allocate new memory.

int ml_tensors_data_destroy (ml_tensors_data_h data)

Frees the given tensors data handle.

- Since :

- 5.5

- Parameters:

- [in] data The handle of tensors data.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_data_get_tensor_data (ml_tensors_data_h data, unsigned int index, void **raw_data, size_t *data_size)

Gets the tensor data of the given handle.

This returns a pointer to the memory block in the handle. Do not deallocate the returned tensor data.

- Since :

- 5.5

- Parameters:

- [in] data The handle of tensors data.

- [in] index The index of the tensor.

- [out] raw_data Raw tensor data in the handle.

- [out] data_size Byte size of the tensor data.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful

- ML_ERROR_NOT_SUPPORTED Not supported.

- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_data_set_tensor_data (ml_tensors_data_h data, unsigned int index, const void *raw_data, const size_t data_size)

Copies tensor data into the given handle.

- Since :

- 5.5

- Parameters:

- [in] data The handle of tensors data.
- [in] index The index of the tensor.
- [in] raw_data Raw tensor data to be copied.
- [in] data_size Byte size of raw data.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.
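Unlike ml_tensors_data_get_tensor_data(), ml_tensors_data_set_tensor_data() copies the caller's bytes, so the source buffer can be reused or freed right after the call. The following is a minimal sketch with hypothetical names (`sketch_tensors_data`, `sketch_set_tensor_data()`, and -1 in place of ML_ERROR_INVALID_PARAMETER). Rejecting a `data_size` larger than the tensor's allocated block is an assumption of this model, not documented behaviour.

```c
#include <stddef.h>
#include <string.h>

#define SKETCH_TENSOR_SIZE_LIMIT 16   /* mirrors ML_TENSOR_SIZE_LIMIT */

/* Hypothetical tensors-data frame: one memory block per tensor. */
typedef struct {
  unsigned int count;
  void *tensor[SKETCH_TENSOR_SIZE_LIMIT];
  size_t size[SKETCH_TENSOR_SIZE_LIMIT];
} sketch_tensors_data;

/* Copies data_size bytes from raw_data into tensor `index`.
 * Assumption: a size larger than the tensor's block is rejected. */
static int
sketch_set_tensor_data (sketch_tensors_data *d, unsigned int index,
    const void *raw_data, size_t data_size)
{
  if (d == NULL || raw_data == NULL || index >= d->count)
    return -1;
  if (data_size == 0 || data_size > d->size[index])
    return -1;
  memcpy (d->tensor[index], raw_data, data_size);   /* copy, not alias */
  return 0;
}
```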

int ml_tensors_info_clone (ml_tensors_info_h dest, const ml_tensors_info_h src)

Copies the tensors information.

- Since :

- 5.5

- Parameters:

- [out] dest A destination handle of tensors information.
- [in] src The tensors information to be copied.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.
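As a copy, the clone should be independent of its source: later changes to `dest` do not affect `src`. Because `dest` is passed by value as a handle, it presumably has to exist already, for example from ml_tensors_info_create(). The sketch below uses hypothetical structs (`sketch_tensor_info`, `sketch_tensors_info`) and a hypothetical `sketch_info_clone()` in place of the real handle types and function. Tensor names are left out, since a copy that includes them would need to duplicate each string rather than share its pointer.

```c
#include <string.h>

#define SKETCH_TENSOR_SIZE_LIMIT 16   /* mirrors ML_TENSOR_SIZE_LIMIT */
#define SKETCH_TENSOR_RANK_LIMIT 4    /* assumed value of ML_TENSOR_RANK_LIMIT */

/* Hypothetical per-tensor description. */
typedef struct {
  int type;                                    /* stand-in for ml_tensor_type_e */
  unsigned int dim[SKETCH_TENSOR_RANK_LIMIT];  /* stand-in for ml_tensor_dimension */
} sketch_tensor_info;

/* Hypothetical tensors information: up to 16 tensor descriptions. */
typedef struct {
  unsigned int count;
  sketch_tensor_info info[SKETCH_TENSOR_SIZE_LIMIT];
} sketch_tensors_info;

/* Copies src into an existing dest; afterwards the two are independent. */
static int
sketch_info_clone (sketch_tensors_info *dest, const sketch_tensors_info *src)
{
  if (dest == NULL || src == NULL)
    return -1;
  memcpy (dest, src, sizeof (*dest));
  return 0;
}
```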

int ml_tensors_info_create (ml_tensors_info_h *info)

Creates a tensors information handle with default values.

- Since :

- 5.5

- Parameters:

- [out] info The handle of tensors information.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_info_destroy (ml_tensors_info_h info)

Frees the given tensors information handle.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_info_get_count (ml_tensors_info_h info, unsigned int *count)

Gets the number of tensors from the given tensors information handle.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information.
- [out] count The number of tensors.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_info_get_tensor_dimension (ml_tensors_info_h info, unsigned int index, ml_tensor_dimension dimension)

Gets the tensor dimension from the given tensors information handle.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information.
- [in] index The index of the tensor.
- [out] dimension The tensor dimension.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_info_get_tensor_name (ml_tensors_info_h info, unsigned int index, char **name)

Gets the tensor name from the given tensors information handle.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information.
- [in] index The index of the tensor.
- [out] name The tensor name.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_info_get_tensor_size (ml_tensors_info_h info, int index, size_t *data_size)

Gets the byte size of the tensor data described by the given tensors information handle.

To get the total byte size of all tensors, set index to -1. Note that the maximum number of tensors is 16 (ML_TENSOR_SIZE_LIMIT).

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information.
- [in] index The index of the tensor, or -1 for the total size of all tensors.
- [out] data_size The byte size of tensor data.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.
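A worked example helps with the byte-size arithmetic. The sketch assumes, as we understand NNStreamer to work, that a tensor's byte size is its element size multiplied by all four dimension entries, and that index -1 sums the sizes of every tensor. The enum, the struct and `sketch_get_tensor_size()` are hypothetical stand-ins for ml_tensor_type_e, ml_tensors_info_h and the real function; -1 stands in for ML_ERROR_INVALID_PARAMETER.

```c
#include <stddef.h>

#define SKETCH_TENSOR_RANK_LIMIT 4    /* assumed value of ML_TENSOR_RANK_LIMIT */
#define SKETCH_TENSOR_SIZE_LIMIT 16   /* mirrors ML_TENSOR_SIZE_LIMIT */

/* Hypothetical subset of element types. */
typedef enum { SK_UINT8, SK_INT16, SK_FLOAT32, SK_FLOAT64 } sketch_type;

typedef struct {
  sketch_type type;
  unsigned int dim[SKETCH_TENSOR_RANK_LIMIT];
} sketch_tensor_info;

/* Bytes per element for each hypothetical type. */
static size_t sketch_element_size (sketch_type t)
{
  switch (t) {
    case SK_UINT8:   return 1;
    case SK_INT16:   return 2;
    case SK_FLOAT32: return 4;
    case SK_FLOAT64: return 8;
  }
  return 0;
}

/* Byte size of one tensor: element size times every dimension entry. */
static size_t sketch_tensor_size (const sketch_tensor_info *t)
{
  size_t size = sketch_element_size (t->type);
  for (int i = 0; i < SKETCH_TENSOR_RANK_LIMIT; i++)
    size *= t->dim[i];
  return size;
}

/* index >= 0: size of that tensor; index == -1: total of all tensors. */
static int
sketch_get_tensor_size (const sketch_tensor_info *tensors, unsigned int count,
    int index, size_t *data_size)
{
  if (tensors == NULL || data_size == NULL || count > SKETCH_TENSOR_SIZE_LIMIT)
    return -1;
  if (index == -1) {
    size_t total = 0;
    for (unsigned int i = 0; i < count; i++)
      total += sketch_tensor_size (&tensors[i]);
    *data_size = total;
    return 0;
  }
  if (index < 0 || (unsigned int) index >= count)
    return -1;
  *data_size = sketch_tensor_size (&tensors[index]);
  return 0;
}
```

For a uint8 RGB image with dimension 3:224:224:1 the size is 1 × 3 × 224 × 224 × 1 = 150528 bytes; adding a float32 vector of 1001 elements (4004 bytes) gives 154532 bytes for index -1.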

int ml_tensors_info_get_tensor_type (ml_tensors_info_h info, unsigned int index, ml_tensor_type_e *type)

Gets the tensor type from the given tensors information handle.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information.
- [in] index The index of the tensor.
- [out] type The tensor type.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_info_set_count (ml_tensors_info_h info, unsigned int count)

Sets the number of tensors in the given tensors information handle.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information.
- [in] count The number of tensors.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_info_set_tensor_dimension (ml_tensors_info_h info, unsigned int index, const ml_tensor_dimension dimension)

Sets the tensor dimension in the given tensors information handle.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information.
- [in] index The index of the tensor to be updated.
- [in] dimension The tensor dimension to be set.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.
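ml_tensor_dimension is a fixed-size array of unsigned integers, so the parameter decays to a pointer and the values have to be copied into the information handle. The sketch below assumes a rank limit of 4 and the NNStreamer convention, as we understand it, of listing dimensions innermost first (channel, width, height, batch) with unused ranks set to 1. `sketch_dimension`, `sketch_image_dimension()` and `sketch_set_dimension()` are hypothetical.

```c
#include <string.h>

#define SKETCH_TENSOR_RANK_LIMIT 4   /* assumed value of ML_TENSOR_RANK_LIMIT */

/* Same shape as ml_tensor_dimension: a fixed-size array of unsigned ints. */
typedef unsigned int sketch_dimension[SKETCH_TENSOR_RANK_LIMIT];

/* Hypothetical per-tensor description holding only the dimension. */
typedef struct {
  sketch_dimension dim;
} sketch_tensor_info;

/* Fills an image dimension innermost first: channel, width, height, batch. */
static void
sketch_image_dimension (sketch_dimension dim, unsigned int channels,
    unsigned int width, unsigned int height)
{
  dim[0] = channels;
  dim[1] = width;
  dim[2] = height;
  dim[3] = 1;   /* one frame per buffer */
}

/* The array parameter decays to a pointer, so the values are copied into
 * the info; the caller's array can be modified or reused afterwards. */
static void
sketch_set_dimension (sketch_tensor_info *info, const sketch_dimension dim)
{
  memcpy (info->dim, dim, sizeof (sketch_dimension));
}
```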

int ml_tensors_info_set_tensor_name (ml_tensors_info_h info, unsigned int index, const char *name)

Sets the tensor name in the given tensors information handle.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information.
- [in] index The index of the tensor to be updated.
- [in] name The tensor name to be set.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_info_set_tensor_type (ml_tensors_info_h info, unsigned int index, const ml_tensor_type_e type)

Sets the tensor type in the given tensors information handle.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information.
- [in] index The index of the tensor to be updated.
- [in] type The tensor type to be set.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.

int ml_tensors_info_validate (const ml_tensors_info_h info, bool *valid)

Validates the given tensors information.

If the function returns an error, valid is not changed.

- Since :

- 5.5

- Parameters:

- [in] info The handle of tensors information to be validated.
- [out] valid true if it's valid, false if it's invalid.

- Returns:

0 on success. Otherwise a negative error value.

- Return values:

- ML_ERROR_NONE Successful.
- ML_ERROR_NOT_SUPPORTED Not supported.
- ML_ERROR_INVALID_PARAMETER Given parameter is invalid.
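The exact checks performed by ml_tensors_info_validate() are not listed here. The sketch below assumes plausible rules (between 1 and ML_TENSOR_SIZE_LIMIT tensors, each with a known type and no zero dimension entry) and shows the documented contract that `valid` is left unchanged when an error is returned. All names are hypothetical, and -1 stands in for ML_ERROR_INVALID_PARAMETER.

```c
#include <stdbool.h>
#include <stddef.h>

#define SKETCH_TENSOR_RANK_LIMIT 4    /* assumed value of ML_TENSOR_RANK_LIMIT */
#define SKETCH_TENSOR_SIZE_LIMIT 16   /* mirrors ML_TENSOR_SIZE_LIMIT */

/* Hypothetical subset of element types, including an "unknown" marker. */
typedef enum { SK_TYPE_UNKNOWN = -1, SK_UINT8 = 0, SK_FLOAT32 } sketch_type;

typedef struct {
  sketch_type type;
  unsigned int dim[SKETCH_TENSOR_RANK_LIMIT];
} sketch_tensor_info;

typedef struct {
  unsigned int count;
  sketch_tensor_info info[SKETCH_TENSOR_SIZE_LIMIT];
} sketch_tensors_info;

/* Assumed rules: 1..16 tensors, each with a known type and no zero dimension.
 * On error (-1), *valid is left untouched, as the documentation requires. */
static int sketch_info_validate (const sketch_tensors_info *info, bool *valid)
{
  if (info == NULL || valid == NULL)
    return -1;

  bool ok = info->count > 0 && info->count <= SKETCH_TENSOR_SIZE_LIMIT;
  for (unsigned int i = 0; ok && i < info->count; i++) {
    if (info->info[i].type == SK_TYPE_UNKNOWN)
      ok = false;
    for (int r = 0; ok && r < SKETCH_TENSOR_RANK_LIMIT; r++)
      if (info->info[i].dim[r] == 0)
        ok = false;
  }
  *valid = ok;
  return 0;
}
```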