The Voice Recorder sample application demonstrates how you can record and play voice using a wearable device. The recording time is limited to 10 seconds.

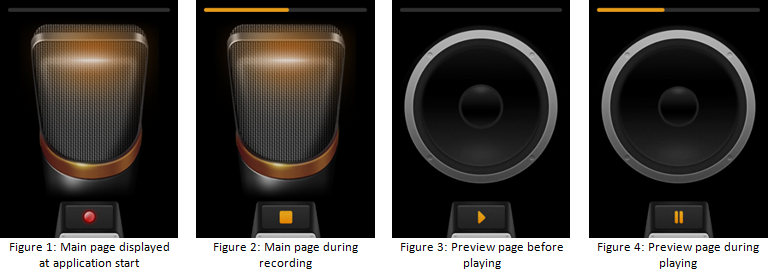

The following figure illustrates the main screens of the Voice Recorder.

Figure: Voice Recorder screens

When the application is opened, it displays the main page with the record button at the bottom of the screen.

Tap the record button at the bottom of the screen to make a voice recording. The application starts recording, and the record button is replaced by the stop button. The recording state is indicated by the progress bar at the top of the screen.

Tap on the stop button to stop recording (recording stops automatically after 10 seconds). The application saves the recorded voice and displays a preview page. The stop button is replaced by a play button.

Tap on the play button to play the recorded voice. The application starts playback, and the play button is replaced by the pause button. The playback state is indicated by the progress bar at the top of the screen.

Tap on the pause button to stop playing the recorded voice. The application stops playing, and the pause button is replaced by the play button.

Swipe down to return to the main page.

Source Files

You can create and view the sample application project including the source files in the IDE.

| File name | Description |
|---|---|
| config.xml | This file contains the application information for the platform to install and launch the application, including the view mode and the icon to be used in the device menu. |
| css/style.css | This file contains the CSS styling for the application UI. |
| images/ | This directory contains the images used to create the user interface. |
| index.html | This is the file from which the application starts loading. It contains the layout of the application screens. |
| js/app.js | This file contains the code for the main application module used for initialization. |
| js/core/ | This directory contains the core modules, which are used in other parts of the application code. core.js implements a simple AMD (Asynchronous Module Definition) loader and defines how modules are declared. The application uses a simple MV (Model View) architecture. |
| js/helpers/ | This directory contains files for implementing the helper functions. |
| js/models/ | This directory contains files that define an abstraction layer over the Camera API. |
| js/views/ | This directory contains the code for handling the UI events for all pages. |
| lib/tau/ | This directory contains the external TAU library. |

Implementation

The application code is separated into modules. Each module specifies its dependent modules.

The entry point for the application is the js/app.js module. The js/core/core.js library loads it first, using the path given in the data-main attribute.

```html
<!-- index.html -->
<script src="./js/core/core.js" data-main="./js/app.js"></script>
```

The js/app.js module loads the view initialization module, views/init, by listing it as a dependency in its requires array.

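```javascript
/* js/app.js */
define({
    name: 'app',
    requires: ['views/init'],
    def: function appInit() {
        'use strict';

        function init() {
            console.log('app::init');
        }

        // The object literal must start on the same line as "return";
        // otherwise the function returns undefined.
        return {
            init: init
        };
    }
});
```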
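To make the module format more concrete, the following is a minimal sketch of how a loader could resolve the requires list and pass the dependencies to def. It is not the code of js/core/core.js, which is not shown here. The names `defineModule`, `loadModule`, and `setByPath` are hypothetical, loading is synchronous for brevity, and the mapping of a name such as 'core/event' to `req.core.event` is inferred from the way views/init accesses its dependencies.

```javascript
// Hypothetical, synchronous sketch of a define-style module registry.
// The real js/core/core.js is not shown in this document and may differ.
var registry = {};   // module name -> module definition
var instances = {};  // module name -> initialized module

function defineModule(definition) {
    registry[definition.name] = definition;
}

// Stores a value under a nested path, so 'core/event' becomes req.core.event.
function setByPath(target, path, value) {
    var parts = path.split('/');
    var node = target;
    for (var i = 0; i < parts.length - 1; i += 1) {
        node[parts[i]] = node[parts[i]] || {};
        node = node[parts[i]];
    }
    node[parts[parts.length - 1]] = value;
}

// Loads the dependencies first, then builds and initializes the module.
// Circular dependencies are not handled in this sketch.
function loadModule(name) {
    if (instances[name]) {
        return instances[name];
    }
    var definition = registry[name];
    if (!definition) {
        throw new Error('Module not defined: ' + name);
    }
    var req = {};
    (definition.requires || []).forEach(function (dependency) {
        setByPath(req, dependency, loadModule(dependency));
    });
    instances[name] = definition.def(req) || {};
    if (typeof instances[name].init === 'function') {
        instances[name].init();
    }
    return instances[name];
}

// Usage: 'app' depends on 'views/init', so 'views/init' is initialized first.
var initOrder = [];
defineModule({
    name: 'views/init',
    def: function () {
        return { init: function () { initOrder.push('views/init'); } };
    }
});
defineModule({
    name: 'app',
    requires: ['views/init'],
    def: function () {
        return { init: function () { initOrder.push('app'); } };
    }
});
loadModule('app');
console.log(initOrder.join(' -> ')); // prints "views/init -> app"
```

Because each module names its own dependencies, the entry module only needs to list views/init, and the rest of the dependency tree follows from there.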

The views/init module loads the other view modules as dependencies so that they can initialize. It handles the Tizen hardware keys, preloads the images used by the application, and checks the battery state. It also notifies the other modules when the application visibility changes and, on the window blur event, when the application moves to the background.

```javascript
/* js/views/init.js */
define({
    name: 'views/init',
    requires: [
        'core/event',
        'core/application',
        'core/systeminfo',
        'views/main',
        'views/preview'
    ],
    def: function viewsInit(req) {
        'use strict';

        var e = req.core.event,
            app = req.core.application,
            sysInfo = req.core.systeminfo,
            imagesToPreload = [
                'images/button_off.png',
                'images/button_on.png',
                'images/pause_icon.png',
                'images/play_icon.png',
                'images/record_icon.png',
                'images/stop_icon.png',
                'images/microphone_full.jpg',
                'images/speaker_full.jpg',
                'images/speaker_animate.png'
            ];

        /**
         * Handles the tizenhwkey event
         * @param {event} ev
         */
        function onHardwareKeysTap(ev) {
            var keyName = ev.keyName,
                page = document.getElementsByClassName('ui-page-active')[0],
                pageid = (page && page.id) || '';

            if (keyName === 'back') {
                if (pageid === 'main') {
                    app.exit();
                } else {
                    history.back();
                }
            }
        }

        /* Pre-loads the images */
        function preloadImages() {
            var image = null,
                i = 0,
                length = imagesToPreload.length;

            for (i = 0; i < length; i += 1) {
                image = new window.Image();
                image.src = imagesToPreload[i];
            }
        }

        /* Handles the core.systeminfo.battery.low event */
        function onLowBattery() {
            app.exit();
        }

        /**
         * Handles the visibilitychange event
         * @param {event} ev
         */
        function onVisibilityChange(ev) {
            e.fire('visibility.change', ev);
        }

        /* Handles the window blur event */
        function onBlur() {
            e.fire('application.state.background');
        }

        /* Registers the event listeners */
        function bindEvents() {
            document.addEventListener('tizenhwkey', onHardwareKeysTap);
            document.addEventListener('visibilitychange', onVisibilityChange);
            window.addEventListener('blur', onBlur);
            sysInfo.listenBatteryLowState();
        }

        /* Initializes the module */
        function init() {
            preloadImages();
            sysInfo.checkBatteryLowState();
            bindEvents();
        }

        e.listeners({
            'core.systeminfo.battery.low': onLowBattery
        });

        // The object literal must start on the same line as "return".
        return {
            init: init
        };
    }
});
```

The views/main module handles most of the application UI interaction. It captures the audio stream and allows the user to record it. To handle this task, it processes the user actions and uses the models/audio module to handle them.

When the application starts or returns to the foreground, it obtains the audio stream with the help of the models/stream module and initializes the models/audio module, which uses the Camera API to create the CameraControl object.

```javascript
/* js/views/main.js */

/**
 * Handles the models.stream.ready event
 * @param {event} ev
 */
function onStreamReady(ev) {
    stream = ev.detail.stream;
    a.registerStream(stream);
}

/* Initializes the stream */
function initStream() {
    s.getStream();
}

/**
 * Function called when the application visibility state changes
 * (document.visibilityState changed to 'visible' or 'hidden').
 */
function visibilityChange() {
    if (document.visibilityState !== 'visible') {
        if (a.isReady()) {
            a.stopRecording();
            a.release();
        }
    } else {
        if (!a.isReady()) {
            initStream();
        }
    }
}

/* Initializes the module */
function init() {
    initStream();
}

e.listeners({
    'models.stream.ready': onStreamReady,
    'views.init.visibility.change': visibilityChange
});
```

```javascript
/* js/models/stream.js */

/**
 * Fires the models.stream.ready event onGetUserMediaSuccess
 * @param {LocalMediaStream} stream
 */
function onUserMediaSuccess(stream) {
    initAttemtps = 0;
    e.fire('ready', {stream: stream});
}

function getUserMedia(onUserMediaSuccess, onUserMediaError) {
    navigator.webkitGetUserMedia(
        {
            video: false,
            audio: true
        },
        onUserMediaSuccess,
        onUserMediaError
    );
}

function getStream() {
    return getUserMedia(onUserMediaSuccess, onUserMediaError);
}
```

```javascript
/* js/models/audio.js */

/**
 * Executes when audio control is created from the stream
 * @param {audioControl} control
 */
function onAudioControlCreated(control) {
    audioControl = control;
    e.fire('ready');
}

/**
 * Executes on audio control creation error
 * @param {object} error
 */
function onAudioControlError(error) {
    console.error(error);
    e.fire('error', {error: error});
}

/**
 * Registers the stream and creates the audio control for it
 * @param {LocalMediaStream} mediaStream
 */
function registerStream(mediaStream) {
    navigator.tizCamera.createCameraControl(mediaStream, onAudioControlCreated, onAudioControlError);
}
```
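The decision made in the visibilityChange handler of views/main can be summarized as a small pure function, which may make the two branches easier to follow. This is only a sketch: `nextAction` and its return labels ('release', 'init', 'none') are invented for this example and do not exist in the application.

```javascript
// Sketch: the decision taken by visibilityChange(), written as a pure function.
// 'release', 'init', and 'none' are labels invented for this example.
function nextAction(visibilityState, audioReady) {
    if (visibilityState !== 'visible') {
        // Going to the background: stop and release only if audio was set up.
        return audioReady ? 'release' : 'none';
    }
    // Returning to the foreground: request the stream again if it was released.
    return audioReady ? 'none' : 'init';
}

console.log(nextAction('hidden', true));   // release
console.log(nextAction('visible', false)); // init
console.log(nextAction('visible', true));  // none
```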

The models/audio module encapsulates the Camera API and creates an event-driven interface for the view modules.

The main functions exposed by this module are:

- registerStream – initializes the module and creates the CameraControl object. Fires the following events:
    - models.audio.ready – the module is ready to use.
    - models.audio.error – an error occurred during initialization.
- release – releases the CameraControl object. Fires the following events:
    - models.audio.release – the camera was released.
- startRecording – starts the recording. Fires the following events:
    - models.audio.recording.start – the request is accepted and the recording starts.
    - models.audio.recording.error – an error occurred while starting the recording.
- stopRecording – stops the recording. Fires the following events:
    - models.audio.recording.done – the audio file was created successfully (the file path is in the event details). This event is also fired when the recording time limit is exceeded.
    - models.audio.recording.error – an error occurred while stopping the recording.
- getRecordingTime – returns the current recording time.

The view modules call the models/audio module functions and listen to its events to update the UI.
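The event names used in the code excerpts suggest a naming rule: a module fires a short name such as 'ready', and listeners subscribe to the full name prefixed with the module path, such as 'models.stream.ready'. The following sketch shows one way such a bus could work. It is an assumption, not the actual core/event module, which is not shown here; `createEventBus` and `forModule` are hypothetical names, and the file path in the usage example is made up.

```javascript
// Hypothetical sketch of a namespaced event bus; the real core/event module
// is not shown in this document and may work differently.
function createEventBus() {
    var handlers = {}; // full event name -> array of callbacks

    function on(eventName, callback) {
        handlers[eventName] = handlers[eventName] || [];
        handlers[eventName].push(callback);
    }

    return {
        // Returns the fire/listeners pair as seen from one module.
        forModule: function (moduleName) {
            // 'models/audio' becomes the prefix 'models.audio.'
            var prefix = moduleName.replace(/\//g, '.') + '.';
            return {
                fire: function (shortName, detail) {
                    var event = { type: prefix + shortName, detail: detail || {} };
                    (handlers[event.type] || []).forEach(function (callback) {
                        callback(event);
                    });
                },
                listeners: function (map) {
                    Object.keys(map).forEach(function (eventName) {
                        on(eventName, map[eventName]);
                    });
                }
            };
        }
    };
}

// Usage: the model fires a short name, the view listens to the full name.
var bus = createEventBus();
var audioEvents = bus.forModule('models/audio');
var viewEvents = bus.forModule('views/main');
var received = [];

viewEvents.listeners({
    'models.audio.recording.done': function (ev) {
        received.push(ev.detail.path);
    }
});
audioEvents.fire('recording.done', { path: 'recordings/sample.amr' }); // made-up path
console.log(received); // [ 'recordings/sample.amr' ]
```

With this arrangement the models never reference the views directly, which matches the event-driven interface described above.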

The voice recording process starts when the user taps the record button. When the views/main module detects the click event, it calls the startRecording function of the models/audio module.

```javascript
/* js/views/main.js */

/* Starts the audio recording */
function startRecording() {
    recordingLock = true;
    a.startRecording();
    resetRecordingProgress();
    showRecordingView();
}

/* Stops the audio recording */
function stopRecording() {
    recordingLock = true;
    a.stopRecording();
}

/* Starts or stops the audio recording */
function setRecording() {
    if (recording) {
        startRecording();
    } else {
        stopRecording();
    }
}

/* Handles the click event on record button */
function onRecordBtnClick() {
    if (recordingLock || document.hidden) {
        return;
    }
    toggleRecording();
    setRecording();
}

/* Registers the event listeners */
function bindEvents() {
    recordBtnMap.addEventListener('click', onRecordBtnClick);
}
```
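The recordingLock flag keeps repeated taps from issuing a second request while the first one is still pending. The sketch below isolates that guard so it can be run without a device. It rests on an assumption: the excerpt does not show where the lock is released, so the sketch supposes it happens once the model reports the result. `createRecordButton`, `tap`, and `unlock` are hypothetical names.

```javascript
// Sketch of the tap guard. Assumption: the lock is released when the model
// reports that the requested operation has finished; the excerpt does not
// show where this happens in the application.
function createRecordButton(model) {
    var recording = false;
    var recordingLock = false;

    return {
        tap: function () {
            if (recordingLock) {
                return false; // ignore taps while a request is pending
            }
            recording = !recording;
            recordingLock = true;
            if (recording) {
                model.startRecording();
            } else {
                model.stopRecording();
            }
            return true;
        },
        // Would be called by the (hypothetical) handlers of the model events.
        unlock: function () {
            recordingLock = false;
        },
        isRecording: function () {
            return recording;
        }
    };
}

var calls = [];
var button = createRecordButton({
    startRecording: function () { calls.push('start'); },
    stopRecording: function () { calls.push('stop'); }
});

button.tap();       // starts recording and locks the button
button.tap();       // ignored: the button is still locked
button.unlock();    // the model confirmed the start
button.tap();       // stops recording
console.log(calls); // [ 'start', 'stop' ]
```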

The models/audio module then processes the request. It applies the audio file settings (CameraControl.recorder.applySettings) and starts the recording (CameraControl.recorder.start). If no error occurs, it starts tracking the recording time and notifies the other modules that the recording has started by firing the models.audio.recording.start event.

```javascript
/* js/models/audio.js */

/* Executes when the recording starts successfully */
function onRecordingStartSuccess() {
    startTracingAudioLength();
    e.fire('recording.start');
}

/**
 * Executes when an error occurs during the recording start
 * @param {object} error
 */
function onRecordingStartError(error) {
    busy = false;
    e.fire('recording.error', {error: error});
}

/* Executes when the audio settings are applied */
function onAudioSettingsApplied() {
    if (!stopRequested) {
        audioControl.recorder.start(onRecordingStartSuccess, onRecordingStartError);
    } else {
        e.fire('recording.cancel');
    }
}

/**
 * Starts the audio recording.
 * When the recording starts successfully, the audio.recording.start event
 * is fired. If an error occurs, the audio.recording.error event is fired.
 * @return {boolean} true if the process starts, false otherwise
 * (another audio operation is in progress).
 */
function startRecording() {
    var settings = {},
        fileName = '';

    if (busy) {
        return false;
    }

    stopRequested = false;
    busy = true;
    fileName = createAudioFileName();
    audioPath = AUDIO_DESTINATION_DIRECTORY + '/' + fileName;
    settings.fileName = fileName;
    settings.recordingFormat = getRecordingFormat();
    audioControl.recorder.applySettings(settings, onAudioSettingsApplied, onAudioSettingsError);

    return true;
}
```
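The interplay of the busy and stopRequested flags is easier to see when the flow runs against a stand-in recorder. The following sketch replaces CameraControl.recorder with a fake object whose callbacks are queued until `flush` is called, which simulates the asynchronous device calls. `createFakeRecorder`, `createAudioModel`, `requestStop`, and `flush` are hypothetical names, and the sketch only records event names instead of firing them.

```javascript
// Sketch only: a fake recorder stands in for CameraControl.recorder.
// Success callbacks are queued and run by flush(), which simulates the
// device completing its asynchronous work.
function createFakeRecorder() {
    var pending = [];
    return {
        applySettings: function (settings, onSuccess) { pending.push(onSuccess); },
        start: function (onSuccess) { pending.push(onSuccess); },
        flush: function () {
            while (pending.length > 0) {
                pending.shift()();
            }
        }
    };
}

function createAudioModel(recorder) {
    var busy = false;
    var stopRequested = false;
    var fired = []; // names of the events that would be fired, in order

    function onSettingsApplied() {
        if (stopRequested) {
            fired.push('recording.cancel');
            return;
        }
        recorder.start(function () {
            fired.push('recording.start');
        });
    }

    return {
        fired: fired,
        startRecording: function () {
            if (busy) {
                return false; // another operation is still in progress
            }
            stopRequested = false;
            busy = true;
            recorder.applySettings({}, onSettingsApplied);
            return true;
        },
        requestStop: function () {
            stopRequested = true;
        }
    };
}

// Normal start: the second request is rejected while the first is pending.
var recorder = createFakeRecorder();
var model = createAudioModel(recorder);
var first = model.startRecording();   // true
var second = model.startRecording();  // false
recorder.flush();                     // model.fired is ['recording.start']

// Early stop: the stop request arrives before the settings are applied.
var cancelRecorder = createFakeRecorder();
var cancelled = createAudioModel(cancelRecorder);
cancelled.startRecording();
cancelled.requestStop();
cancelRecorder.flush();               // cancelled.fired is ['recording.cancel']

console.log(first, second, model.fired, cancelled.fired);
```

Checking stopRequested only after the settings are applied means that a stop issued in the gap never reaches the recorder as a start request.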

When the user taps the stop button or when the recording time limit is reached, the stopRecording function of the models/audio module is called. It requests the CameraControl object to stop the current recording and then fires the models.audio.recording.done event with the audio file path in its details. The views/main module listens to this event and opens a separate view to allow the user to preview the recorded file.

```javascript
/* js/models/audio.js */

/* Executes when the audio recording stops successfully */
function onAudioRecordingStopSuccess() {
    busy = false;
    e.fire('recording.done', {path: audioPath});
    audioRecordingTime = 0;
}

/**
 * Executes when the audio recording stop fails
 * @param {object} error
 */
function onAudioRecordingStopError(error) {
    busy = false;
    e.fire('recording.error', {error: error});
    audioRecordingTime = 0;
}

/**
 * Stops the audio recording.
 * When the recording is stopped, the audio.recording.done event is fired
 * with the file path as its data.
 * If an error occurs, the audio.recording.error event is fired.
 * The recording also stops when MAX_RECORDING_TIME is reached.
 */
function stopRecording() {
    stopRequested = true;
    if (isRecording()) {
        stopTracingAudioLength();
        audioControl.recorder.stop(onAudioRecordingStopSuccess, onAudioRecordingStopError);
    }
}

/**
 * Checks whether the audio length is greater than MAX_RECORDING_TIME.
 * If it is, the recording is stopped.
 */
function checkAudioLength() {
    var currentTime = new Date();

    audioRecordingTime = currentTime - audioRecordingStartTime;
    if (audioRecordingTime > MAX_RECORDING_TIME) {
        stopRecording();
    }
}
```
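The time-limit check in checkAudioLength can be tried in isolation by passing the timestamps in as arguments. The sketch below also derives a progress value of the kind the recording progress bar could display. It assumes that MAX_RECORDING_TIME is expressed in milliseconds and equals the 10-second limit mentioned in the overview; the real constant is not shown in the excerpt, and `recordingStatus` is a hypothetical helper.

```javascript
// Assumption: the limit is in milliseconds and matches the 10-second limit
// described in the overview. The real constant's value is not shown.
var MAX_RECORDING_TIME = 10000;

// Returns the elapsed time, whether the limit is exceeded, and the
// progress bar fill as a percentage clamped to the range 0..100.
function recordingStatus(startTime, currentTime) {
    var elapsed = currentTime - startTime;
    return {
        elapsed: elapsed,
        limitExceeded: elapsed > MAX_RECORDING_TIME,
        progressPercent: Math.min(100, Math.max(0, (elapsed / MAX_RECORDING_TIME) * 100))
    };
}

var start = Date.now();
var halfway = recordingStatus(start, start + 5000);
var overLimit = recordingStatus(start, start + 10001);
console.log(halfway.progressPercent, halfway.limitExceeded);     // 50 false
console.log(overLimit.progressPercent, overLimit.limitExceeded); // 100 true
```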

```javascript
/* js/views/main.js */

/**
 * Handles the audio.recording.done event
 * @param {event} ev
 */
function onRecordingDone(ev) {
    var path = ev.detail.path;

    removeRecordingInterval();
    toggleRecording(false);
    updateRecordingProgress();
    if (!exitInProgress) {
        e.fire('show.preview', {audio: path});
    }
}

e.listeners({
    'models.audio.recording.done': onRecordingDone
});
```

```javascript
/* js/views/preview.js */

/**
 * Shows the preview page once the audio data is loaded.
 * @param {event} ev
 */
function showPreviewPage(ev) {
    tau.changePage('#preview', {transition: 'none'});
    ev.target.removeEventListener(ev.type, showPreviewPage);
}

/**
 * Handles the views.main.show.preview event
 * @param {event} ev
 */
function show(ev) {
    var detail = ev.detail;

    prevProgressVal.style.width = '0';
    audioPlayState = false;
    audio.src = detail.audio;
    audio.addEventListener('loadeddata', showPreviewPage);
}

e.listeners({
    'views.main.show.preview': show
});
```